Navigating the AI Dust Storm: A guide for publishers

Over the past months, the tech landscape has been hit by a dust storm: an explosion of initiatives, launches and innovation in Generative AI.

The rapid pace of innovation has become overwhelming. With this bi-weekly “AI Dust Storm” series, we aim to be your guide, providing the information and resources you need to stay on top of the latest developments in AI and to understand the impact of Generative AI on journalism and the news business.

Every two weeks we will:

- Share tools and resources to kickstart or accelerate your Generative AI experiments

- Find pathways and highlight the most important developments in the previous 2 weeks

- Share the opinion of industry experts

Today we are kicking off this AI Dust Storm series with a few key concepts and tools to get you started.

Key Generative AI Concepts you should know

Last Friday, a team at Twipe organised an AI Bootcamp to better understand Generative AI and explore the opportunities it could offer our customers. Key concepts and tools were unpacked and demystified for the broader team.

1. Large Language Models

Large Language Models are deep learning models that can process and generate human-like language. Large Language Models learn to predict the probability of the next word in a sentence based on the previous words. Language models are used in many applications that require natural language processing, such as:

- Chatbots that can respond to user queries

- Content Creation Tools that can generate human-like text

- Code generation and evaluation

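The model’s performance depends on various hyperparameters, such as learning rate, batch size, and number of layers, which need to be tuned to optimize the model. Models with more parameters (the weights learned during training) often perform better, but size alone does not guarantee it: the quality of the training data and of the training process matter as well.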
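To make the idea of next-word prediction concrete, here is a minimal sketch in Python. It is not how a Large Language Model is built: it is a toy "bigram" counter that only looks at the single previous word, and the corpus and function names are made up for illustration. The underlying idea is the same, though: estimate how likely each word is to come next.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def next_word_probabilities(follow_counts, word):
    """Turn the raw follow-up counts for `word` into probabilities."""
    counts = follow_counts.get(word, Counter())
    total = sum(counts.values())
    if total == 0:
        return {}  # the word was never seen with a follower
    return {candidate: count / total for candidate, count in counts.items()}


# Hypothetical mini corpus, just for illustration.
corpus = "the reader opens the app and the reader reads the news"
model = train_bigram_model(corpus)
probabilities = next_word_probabilities(model, "the")
print(probabilities)
```

In this tiny corpus, "the" is followed by "reader" twice and by "app" and "news" once each, so "reader" gets the highest probability. A real model does the same kind of estimation over billions of words and with far more context than one previous word.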

2. Temperature

Temperature is a parameter that some models use to govern the randomness and “creativity” of the responses. It is typically a number between 0 and 1, although some APIs, including OpenAI’s, accept values up to 2. A temperature of 0 means the responses will be very straightforward and in line with expectations (meaning a query will almost always generate the same response). A temperature of 1 means the responses can be more unexpected and thus vary considerably. In practice, a temperature between 0.7 and 0.9 is often recommended for creative tasks.
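For readers who like to see the mechanics, the sketch below shows one common way temperature is applied: the model's raw scores for each candidate word are divided by the temperature before being turned into probabilities. The scores and function name are invented for illustration, and real models may handle a temperature of exactly 0 as a special case (always pick the top candidate).

```python
import math


def softmax_with_temperature(scores, temperature):
    """Convert raw scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [score / temperature for score in scores]
    highest = max(scaled)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(value - highest) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


# Hypothetical raw scores for three candidate next words.
scores = [2.0, 1.0, 0.5]

low = softmax_with_temperature(scores, 0.2)   # sharp: top candidate dominates
high = softmax_with_temperature(scores, 1.0)  # flatter: more variety

print("temperature 0.2:", [round(p, 3) for p in low])
print("temperature 1.0:", [round(p, 3) for p in high])
```

At the lower temperature almost all of the probability goes to the top-scoring word, which is why answers become predictable. At the higher temperature the other candidates get a real chance of being picked.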

3. Training Data Set

Large Language Models are trained on a large dataset of text, such as books or articles, to learn the patterns and structure of language. The data is pre-processed to extract features and transformed into a numerical format that the model can process.
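The "numerical format" step can be illustrated with a deliberately simple sketch: give every distinct word an integer ID and replace the text with a list of IDs. This is an assumption-laden simplification. Production models use subword tokenizers rather than whole words, and the example sentences and function names here are invented.

```python
def build_vocabulary(texts):
    """Assign each distinct word an integer ID, in order of first appearance."""
    vocabulary = {}
    for text in texts:
        for word in text.lower().split():
            if word not in vocabulary:
                vocabulary[word] = len(vocabulary)
    return vocabulary


def encode(text, vocabulary, unknown_id=-1):
    """Replace each word by its ID; words not in the vocabulary get unknown_id."""
    return [vocabulary.get(word, unknown_id) for word in text.lower().split()]


# Hypothetical training snippets.
articles = ["Readers love morning editions", "Morning editions drive loyalty"]
vocabulary = build_vocabulary(articles)
encoded = encode("Readers love loyalty", vocabulary)

print(vocabulary)
print(encoded)
```

Once text is a sequence of numbers like this, the model can learn statistical patterns over those sequences.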

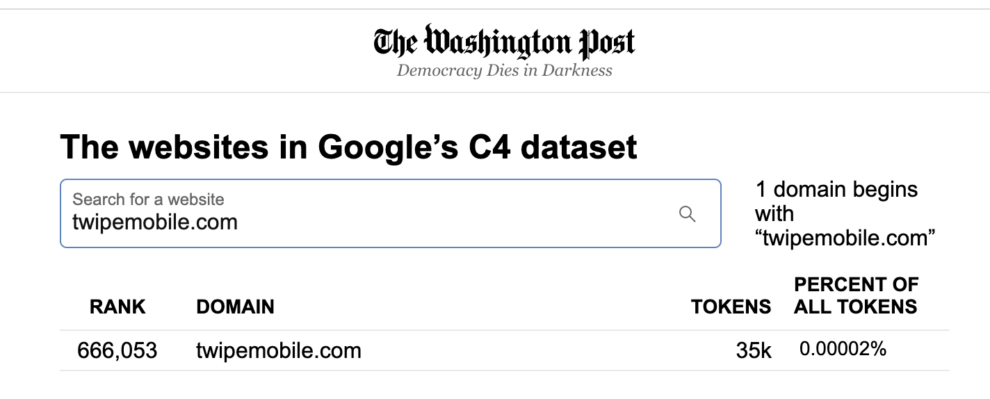

The Washington Post recently published an inventory of the websites in Google’s C4 dataset, which has been used to train large language models. Interestingly, our Twipe website is also part of this dataset. Most likely you will find yours too.

4. Prompting

Prompting is how humans talk to AIs. It is a way to tell the AI what we want and how we want it. It is usually done with words, but it can also be done in code. Because AIs understand words differently than we do, prompt engineering has become an increasingly in-demand skill. This is the process of designing and refining prompts that steer AI models to perform specific tasks.

In a Washington Post article, experts in the field argue that the early weirdness of chatbots is actually a failure of the human imagination. Prompt engineering addresses this problem by relying on humans who know how to give the machine the right instructions. At advanced levels, the dialogues between prompt engineers and machines look like logic puzzles with twisting narratives of requests and responses.
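As an illustration of prompting "in code", the sketch below assembles a prompt from reusable parts: a role, a task, some constraints and the article text. The structure and wording are our own assumptions, not an official template. The point is only that a prompt can be built programmatically and reused across many articles.

```python
def build_prompt(role, task, article_text, constraints):
    """Assemble a structured prompt from its parts."""
    constraint_lines = "\n".join(f"- {item}" for item in constraints)
    return (
        f"You are {role}.\n"
        f"Task: {task}\n"
        f"Constraints:\n{constraint_lines}\n"
        f"Article:\n{article_text}"
    )


# Hypothetical newsroom example.
prompt = build_prompt(
    role="an experienced news editor",
    task="Write a headline for the article below.",
    article_text="The city council approved the new cycling plan on Monday.",
    constraints=["Maximum 10 words", "Neutral tone"],
)
print(prompt)
```

Changing one argument, for example the tone constraint, produces a new prompt without rewriting the rest.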

“The hottest new programming language is English,”

Andrej Karpathy, Tesla’s former chief of AI

Some easy practical tips to up your prompting game have been collected in this Tweet by Rowan Cheung. For a comprehensive prompting skills course the place to go to is LearnPrompting.org.

5. OpenAI and other Generative AI Tools

OpenAI provides various pre-trained AI models and APIs that can be used for text, image, and other data processing tasks. These APIs can be used to generate text, perform language translation, analyze sentiment, and more. Examples of OpenAI APIs include the widely known GPT-3 and 4 as well as the image generation model DALL-E.

The advantage of using the OpenAI APIs over the ChatGPT interface is the flexibility you get in setting the model’s parameters and building applications tailored to your use cases.
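To give a feel for that flexibility, here is a sketch of what a request body for a chat-style OpenAI API call can look like. The field names (`model`, `messages`, `temperature`, `max_tokens`) follow OpenAI's Chat Completions request format as we understand it. The helper function, the system message and the default values are our own assumptions, so check the current API documentation before relying on them. The sketch only builds the request and does not send it, which would additionally require an API key.

```python
import json


def build_chat_request(user_message, model="gpt-4", temperature=0.7, max_tokens=200):
    """Build a request body in the chat-completions style (not sent anywhere)."""
    return {
        "model": model,
        "messages": [
            # Hypothetical system message setting the assistant's role.
            {"role": "system", "content": "You are a helpful assistant for a newsroom."},
            {"role": "user", "content": user_message},
        ],
        "temperature": temperature,  # lower values give more predictable answers
        "max_tokens": max_tokens,    # upper bound on the length of the reply
    }


request_body = build_chat_request(
    "Summarise this article in two sentences: ...",
    temperature=0.2,
)
print(json.dumps(request_body, indent=2))
```

None of these settings can be changed in the standard ChatGPT interface, which is what makes the API attractive for tailored applications.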

While OpenAI has become the most popular hub for accessing the latest evolutions in generative AI, there are various other tools out there. Product Hunt compiled a list of 60. Probably the most comprehensive overview of Generative AI tools available for publishers and media organizations has been curated by digital consultant and investor Andy Evans.

6. Auto-GPT

Auto-GPT is an experimental open-source application that demonstrates the advanced capabilities of the GPT-4 language model. This software uses GPT-4 to chain together LLM “thoughts” in order to pursue a given objective autonomously.

Auto-GPT represents one of the earliest instances of what we could call “AI Agents”, fully autonomous AIs that are spawned to perform highly specific tasks, thus pushing the boundaries of what is achievable with artificial intelligence.

As an open-source project, and one of the fastest growing on GitHub, Auto-GPT is continuously being improved and expanded by a community of 3000+ developers. What makes it extremely powerful is that the code can be extended with specific commands and third-party APIs to perform various tasks at any given company.
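The idea of chaining "thoughts" towards an objective can be sketched as a simple loop: ask the model for the next step, carry it out, remember it, and repeat until the model says it is done. The code below is not Auto-GPT's implementation. The `fake_llm_plan` function is a hard-coded stand-in for a real GPT-4 call, and all names are hypothetical.

```python
def fake_llm_plan(objective, completed_steps):
    """Stand-in for an LLM call: return the next step, or None when finished."""
    plan = ["search for sources", "summarise findings", "write report"]
    remaining = [step for step in plan if step not in completed_steps]
    return remaining[0] if remaining else None


def run_agent(objective, plan_next_step, max_iterations=10):
    """Repeatedly ask for the next step until the planner reports it is done."""
    completed_steps = []
    for _ in range(max_iterations):  # hard cap so the loop cannot run forever
        step = plan_next_step(objective, completed_steps)
        if step is None:
            break
        # A real agent would execute the step here, e.g. by calling a tool or API.
        completed_steps.append(step)
    return completed_steps


steps = run_agent("Research local election coverage", fake_llm_plan)
print(steps)
```

The iteration cap is a deliberate design choice: an autonomous loop that calls a paid API should always have a stopping condition.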

7. Hugging Face

Hugging Face is an open-source project that lets you download multiple LLMs locally and provides datasets to train them. It also offers courses and educational materials. Hugging Face has launched an open-source alternative to ChatGPT, which gives everyone the opportunity to download the underlying models and train them on proprietary data.

Essential Generative Toolbox for Publishers

If there is one consensus among leaders across the industry regarding Generative AI, it is that we must experiment with it to understand what it represents.

There are a variety of tools out there, but these are the must-haves for everyone who wants to explore the use cases of Generative AI:

- Text: GPT-3 and GPT-4, available through general-purpose chat interfaces and API models

- Image: DALL-E and Midjourney

- Audio: ElevenLabs

- Code: OpenAI Codex

- Every other tool you can think of

- Check the website “There’s an AI for that”

- Great collection of bookmarks at Raindrop.io

4 evolutions from the past 2 weeks

Generative AI has seen remarkable progress in recent months, thanks in part to its open-source and collaborative nature. As more researchers and developers share their work and insights, the field is growing exponentially, opening up new possibilities for creativity and innovation.

For news publishers, these developments can translate into new product innovation sparked by these models, or into non-technical people and entrepreneurs being empowered to develop websites and software at breakneck speed. Additionally, AI agents and personal assistants built with Auto-GPT can become part of the daily workflow, enabling process automation and a 10x gain in productivity. Some examples were highlighted last month in our own blog.

GPT-4 introduces Code Interpreter

OpenAI has recently introduced a new function called “Code Interpreter” that allows users to upload and download files such as tables or code and use GPT-4 to evaluate, modify, and save them locally.

The tool can also perform data analysis and produce charts, while browsing the internet to gather information is handled by a separate ChatGPT feature. This opens up exciting new possibilities for programmers and data analysts who want to automate mundane tasks and focus on more creative work. With GPT-4’s ability to understand natural language and generate code, the Code Interpreter function is a powerful tool that can significantly speed up the coding and data analysis process.

By leveraging the power of generative AI, developers can now achieve even more efficient workflows and make faster progress in their projects.

Ads & movie trailers created entirely by AI

Christian Fleischer used RunwayML and ElevenLabs to generate a Baz Luhrmann-style movie teaser. It took 500+ shots generated with RunwayML to get the 65 shots that made it into the movie, and 5,000 credits were used to generate 3 custom voices with ElevenLabs. The script was co-created with ChatGPT.

A similar experiment, which created an entirely AI-generated story based on the prompt “Write a short story, in first person, about a girl moves to the big city, launches a business, overcomes challenges, and finally succeeds. 300 words”, has received quite some commentary about the dullness of the storyline and the limited creativity of the outcome. Still, it is a very impressive result.

Meta’s new uber-realistic avatars

Meta launched Blimey, a new life-like AI avatar system which Theo Presley argues looks “even more expressive than the real Zuck”. It is a new step in the blending of the real and the virtual.

This is an interesting evolution that could, for example, be picked up by companies that want to pair their customer service chatbots with this type of technology.

$200 saved by AI

CEO of DoNotPay, Joshua Browder, recently shared his experience of outsourcing his entire personal financial life to GPT-4 through the DoNotPay chat. By giving AutoGPT access to his bank accounts, financial statements, credit reports, and email, he was able to save money in some fascinating ways.

The bots identified and cancelled useless subscriptions worth $80.86 per month, drafted a legal letter and got a refund of $36.99 from United Airlines, and negotiated a $100 discount on a Comcast bill. Joshua’s goal is to make $10,000 with the help of GPT-4.
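A quick back-of-the-envelope check shows where the headline figure comes from. The sketch below adds up the three amounts quoted above. It assumes that the airline refund and the Comcast discount are one-off savings while the subscription saving recurs every month. The article does not say whether the Comcast discount is one-off or recurring, so treat the projection as an illustration only.

```python
from decimal import Decimal

# Amounts quoted in the text above.
monthly_subscriptions = Decimal("80.86")  # cancelled subscriptions, per month
airline_refund = Decimal("36.99")         # assumed one-off
bill_discount = Decimal("100.00")         # assumed one-off (not stated in the text)

one_off_savings = airline_refund + bill_discount
first_month_total = one_off_savings + monthly_subscriptions


def total_after(months):
    """Projected savings after a number of months, under the assumptions above."""
    return one_off_savings + monthly_subscriptions * months


print("First month:", first_month_total)
print("After 12 months:", total_after(12))
```

Under these assumptions the first month already exceeds $200, and the recurring subscription saving is what makes the total grow over time.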

His story highlights how generative AI like GPT-4 can be used to save money and handle mundane tasks that humans would normally do, freeing up time for more important things.
