Navigating the AI Dust Storm: The race for AI search engines and the question of AI ethics

In this second instalment of the “AI Dust Storm” series, we continue to bring insights into the rapidly changing world of Generative AI, distilling the most valuable updates and resources in a manageable and accessible way.

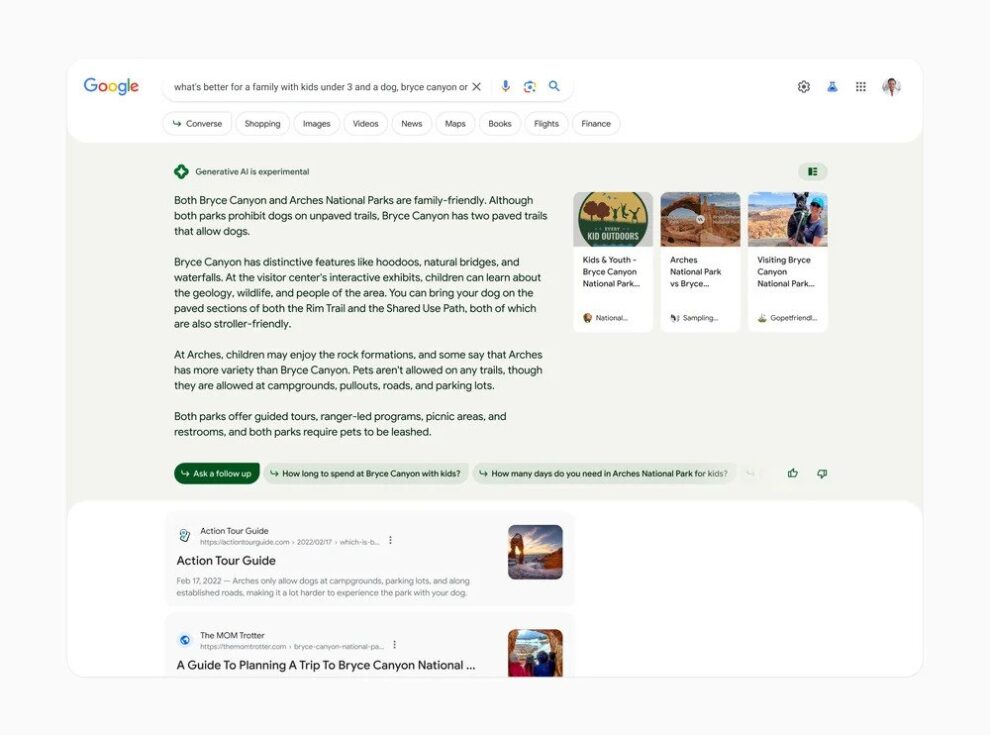

Perhaps the biggest event of the past fortnight has been the news from Google, specifically from its Google I/O event. With Microsoft having announced the integration of OpenAI’s GPT capabilities into its search engine Bing, it was no secret that Google would be close behind.

What do new AI search engines mean for publishers?

Nieman Lab has delved into this question, concluding that the result may not be entirely positive for publishers. Google’s ability to scrape information and deliver it in search results, without users actually visiting the sources of that information, has been causing a loss of traffic and advertising money for years.

But Google’s AI-integrated search takes this to the next level. The new search reportedly allows natural-language interaction, delivering answers backed by sources in a well-structured response. The Washington Post published a guide to the new Google search.

This has the potential to exacerbate a problem that many (including an award-winning television series) have attributed to Google: those searching for information may end their journey on a page hosted by Google.

This potential drop-off in referral traffic could spell further considerable losses for publishers who depend on advertising and on traffic brought to their sites. A further concern is that Google’s AI recommendations could favour information and content from large, established outlets, thereby decreasing the opportunity for smaller, less established outlets to get their foot in the door.

Another feature being expanded is “Perspectives”, which highlights user-generated content such as Reddit posts or Quora questions. This is a bright spot that may counter fears that individual voices, and views not shared by major outlets, will be drowned out. How this will play out in reality, however, remains to be seen.

Experiments widening the world of AI

Hundreds of cases of curious tech enthusiasts pushing the limits of Generative AI are published every day. Here are three that stood out to us from the developments of the past two weeks.

Giving Eyes to GPT-4

Pairing GPT-4 with eye-tracking technology allows the AI to detect and process the focus of a user’s attention and to interact more directly with visual material. The obvious potential application is wearable technology, similar to the now-defunct Google Glass. Integration into wearables could allow users to interact with AI in the real world, with the AI potentially acting as an extension of the user themselves.

Legal action against spread of fake news

While it will come as no surprise that ChatGPT has made the creation and dissemination of fake news easier, until now we had yet to see action taken against those using the technology this way. In China, however, a man has recently been arrested for using ChatGPT to spread fake news about a train crash. The accused was able to evade the systems in place to prevent the spread of misinformation by using ChatGPT to rephrase the same article, thereby bypassing the platform’s duplication checks. As detecting fake news already presents a significant challenge, the proliferation of AI assistance only makes that job more difficult.

BacterAI: Autonomous science experiments

With little to no research having been conducted on approximately 90% of bacteria, an AI project from the University of Michigan in Ann Arbor is using robots integrated with AI to conduct up to 10,000 autonomous experiments per day. Amid the many hypothetical ideas put forward during the recent AI upswing, this application offers a positive real-world use. It is likely to speed up the mapping and understanding of biomes and bacteria in the fields of medicine and agriculture. BacterAI, as it is called, shows significant promise.

The discussion on AI ethics

The topic of ethics has continued to pick up steam in the AI discussion. The open letter initiated by the Future of Life Institute, demanding a slowdown in AI development, has reached 27,000 signatures from people worldwide, including technology experts.

Nicholas Thompson, CEO of The Atlantic, discussed in his “The most interesting thing in tech” series the approach to ethics that the AI company Anthropic has decided to implement. Anthropic has used the Universal Declaration of Human Rights and Apple’s privacy policies to guide the use of its AI. As Thompson rightly assesses, this is a great move towards taking a concrete stance on putting ethics at the forefront of AI initiative and discovery.

However, this action raises several questions. Firstly, are these the right principles? As we have already seen within the AI space, the guidelines and ethics that are meant to steer its direction are flexible: flexible to individuals’ own ethics and flexible to the current understanding of the capabilities and uses of the technologies. As a result, a flexible set of ethics this early in the AI game seems necessary. That being said, allowing significant flexibility in these guidelines can defeat the purpose of having them at all, as people bend the ethics to their own will, drawing the line just beyond what they are willing to do.

Secondly, are AIs capable of understanding these guidelines? If not, the ramifications are clear, and the value of having such principles becomes moot.

Thirdly, are these principles valuable if they are not adopted by the industry? If the principles limit Anthropic’s capabilities and other companies overtake it as a result, the AI world may come to view ethical principles as getting in the way of success. Additionally, without a collective constitution, the industry will have to deal with competing ethical guidelines, potentially causing users to shop around to find the AI that will allow their idea.
