Ethics, AI, and the Future of News: Reflections on ChatGPT’s Anniversary

In the span of a year, ‘AI’ has gone from a tech buzzword to a term used in everyday speech, even being named Collins Dictionary’s Word of the Year. Its meteoric rise in the public’s consciousness reflects more than a passing trend: it marks the start of a shift in how companies operate. This has certainly been the case in the news industry, where organizations have experimented with AI technologies and grappled with the ethical dilemmas they bring.

As we approach the one-year anniversary of ChatGPT, the platform that arguably brought AI into the limelight for the general public, this blog post looks back at where the news industry stood with regard to AI before 2023, explores how ChatGPT has reshaped the newsroom, and discusses the ethical implications of its use in journalism.

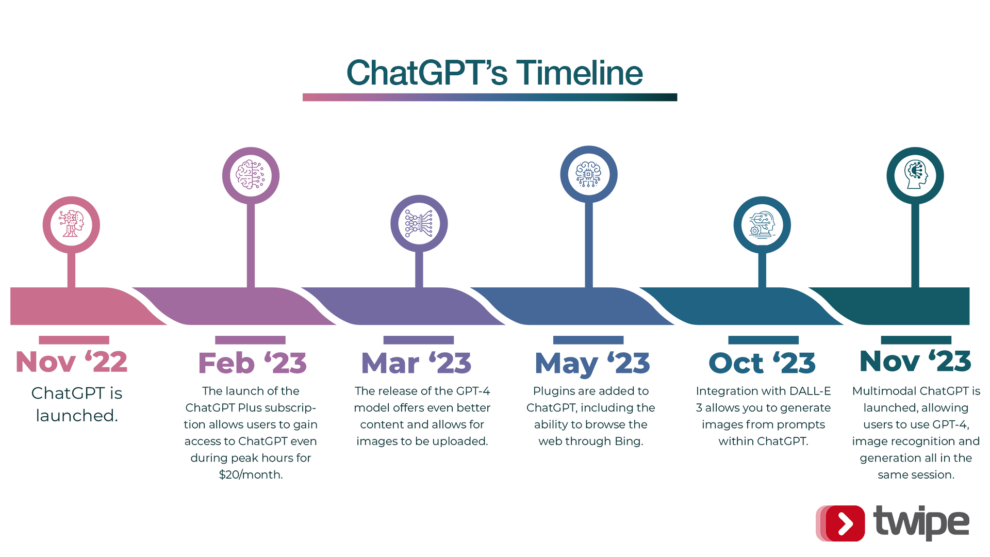

A year of ChatGPT: the timeline

In the year since its release, ChatGPT has undergone several updates:

- November 2022: ChatGPT is launched.

- February 2023: The ChatGPT Plus subscription launches at $20/month, giving subscribers access to ChatGPT even during peak hours.

- March 2023: The GPT-4 model is released, producing more capable responses and introduced as able to accept image inputs.

- May 2023: Plugins are added to ChatGPT, along with the ability to browse the web.

- September 2023: ChatGPT’s knowledge cutoff is updated from September 2021 to January 2022, giving it access to more recent information.

- October 2023: Integration with DALL-E 3 allows you to generate images from prompts within ChatGPT.

- November 2023: Multimodal ChatGPT is launched, allowing users to access GPT-4, image recognition, DALL-E 3 and code interpreter in a single session.

How did newsrooms use AI before ChatGPT?

News organizations were already tinkering with AI long before it became a buzzword. In 2019, a study by the London School of Economics (LSE) surveyed 71 newsrooms across 32 countries to understand how AI was being incorporated into their work. It found that nearly half of the respondents used AI for newsgathering, two-thirds for production, and just over half for distribution.

But what exactly did respondents consider AI in 2019? The study found that definitions varied significantly, ranging from tools that automated tasks to technologies based on machine learning or chatbots. This varied understanding of AI underscored the diversity of its uses. In practice, AI applications in the 2019 newsroom ranged from scraping social media for insights to helping investigative journalists sift through large data sets and documents. AI also played a role in automating simple content creation, managing paywalls, and personalizing content.

Regardless of how it was used, the motivations for embracing AI were clear:

- 68% of newsrooms aimed to bolster journalistic efficiency,

- 45% sought to deliver content with higher relevance to users, and

- 18% aimed at improving business processes.

How ChatGPT changed the newsroom

Today’s newsrooms are increasingly shaped by AI, with tools like ChatGPT streamlining content generation. Adoption has risen sharply: in 2023, 90% of newsrooms that use AI employed it for content creation, up from 75% in 2019. Use cases for AI content generation include:

- AI-driven article drafting, such as Klara Indernach for Express.de,

- generating accompanying imagery with tools such as DALL-E 3,

- enhancing fact-checking,

- augmenting visual storytelling, and

- boosting audience interaction, to name just a few.

Beyond production, AI is also redefining content curation. Some publishers, such as The Times, have used AI to create custom news quizzes, while others, including Belgian public broadcaster VRT, have used it to generate tailored content recommendations. This is in line with the 2023 LSE study, which found that 80% of AI-adopting newsrooms use these technologies to enhance news distribution, a significant leap from the 50% recorded in 2019.

How the job of a journalist has changed with ChatGPT

As the previous section illustrated, AI tools like ChatGPT are playing an increasingly important role in the newsroom. Journalists such as Farhad Manjoo have described this shift, noting how AI can handle routine tasks like suggesting word choices and summarizing content. Yet Burt Herman, writing for Nieman Lab, emphasizes that journalists remain essential as AI becomes more mainstream. More than ever, he argues, journalists must apply their ethical standards and critical thinking to guide and evaluate the technology’s influence.

Indeed, the role of journalists has expanded beyond simply using AI tools effectively. Their responsibilities now include questioning the ethical and moral implications of AI, setting standards for its use, and tracking its potential abuses. They must also maintain human oversight and control over AI to guard against the spread of misinformation. Journalists now serve as both watchdogs and interpreters of AI’s capabilities, keeping the public informed about its potential impact on society.

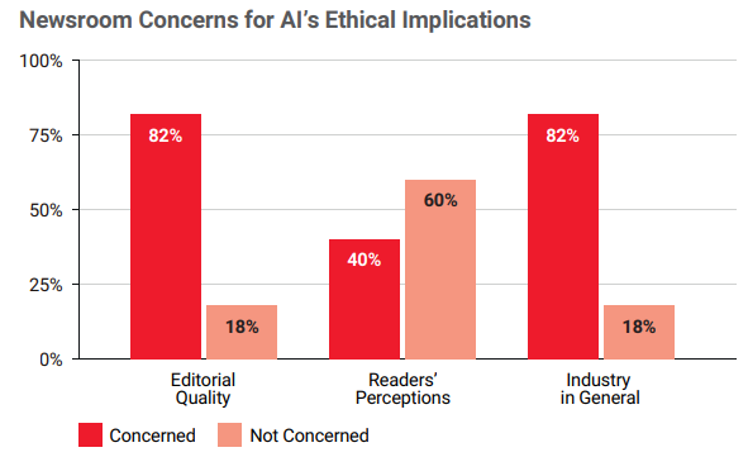

Ethical concerns and editorial policies

As news organizations contend with AI’s influence on editorial content and industry commercialization, ethics are at the forefront of newsrooms’ concerns. A 2023 LSE study highlights that 82% of news organizations have drafted guidelines to address these challenges, focusing on ethical impact, legal issues, and industry dynamics.

The BBC and the Associated Press are prime examples of news organizations experimenting with generative AI. While they appreciate its benefits, they remain vigilant about ethical risks such as misinformation and bias, emphasizing the need for trust, social responsibility, and human oversight in journalism. Both are actively taking steps to safeguard their content and intellectual property from AI misuse, alongside setting strict guidelines for AI’s role in journalism, ensuring human editing, and upholding journalistic standards.

The consensus among newsrooms is clear: AI’s role in journalism needs continuous assessment. Regular evaluations help ensure that AI’s integration aligns with ethical practices and enhances journalistic quality.

A year in: What conclusion can we draw?

As ChatGPT reaches its first anniversary, it is clear that AI has transformed newsrooms by streamlining content generation and distribution. However, it has also brought ethical dilemmas, legal challenges, and changes in journalistic practice. Journalists are now not only content creators but also ethical overseers and interpreters of AI-generated content. The future will require a balance between technological innovation and the core values of journalism.
